As a native Ohioan, I was thrilled to see the Cleveland Cavaliers complete their remarkable championship season, ending a 52-year drought for the city.

However, at least one person was somewhat less enthused that Cleveland came out on top in 2016:

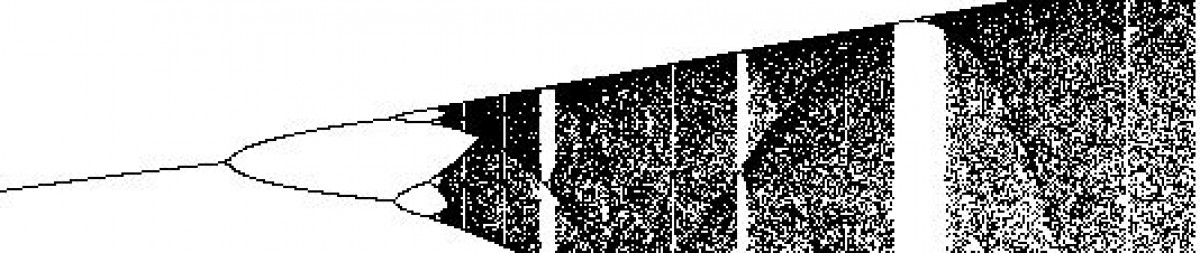

This is not to pick on one pundit in particular. The track records of sports (and politics) prognosticators are rarely checked, and it should be obvious that a history of accuracy is not a strict requirement to be invited back on TV. To be generous, the point of making predictions may be to summarize one’s current state of belief, in a Bayesian sense. In other words, listeners should hear “pundit X believes, based on all evidence currently available, that outcome Y is the most likely.” Unfortunately, this belief is almost always computed unsystematically in the pundit’s head, and is therefore subject to numerous cognitive biases, especially the availability heuristic, the recency illusion, and the subadditivity bias.
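To make “updating a belief in a Bayesian sense” concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption: the hypothesis H (“Cleveland is the stronger team”), the prior of 0.3, and the assumed 60% chance that the stronger team wins any single game are invented numbers, and the helper names `bayes_update` and `update_on_games` are hypothetical. Only the win/loss order follows the 2016 Finals (Cleveland lost games 1, 2 and 4 and won the rest).

```python
def bayes_update(prior: float, p_obs_given_h: float, p_obs_given_not_h: float) -> float:
    """Return P(H | observation) using Bayes' rule."""
    numerator = p_obs_given_h * prior
    evidence = numerator + p_obs_given_not_h * (1.0 - prior)
    return numerator / evidence


# Assumed (hypothetical) likelihoods: the stronger team wins a single game 60% of the time.
P_WIN_IF_STRONGER = 0.6
P_WIN_IF_WEAKER = 0.4


def update_on_games(prior: float, results: list[bool]) -> list[float]:
    """Apply one Bayes update per game (True = Cleveland won); return the belief after each game."""
    beliefs = [prior]
    belief = prior
    for cleveland_won in results:
        if cleveland_won:
            belief = bayes_update(belief, P_WIN_IF_STRONGER, P_WIN_IF_WEAKER)
        else:
            belief = bayes_update(belief, 1 - P_WIN_IF_STRONGER, 1 - P_WIN_IF_WEAKER)
        beliefs.append(belief)
    return beliefs


if __name__ == "__main__":
    # Win/loss order of the 2016 Finals from Cleveland's side; the prior is made up.
    finals_2016 = [False, False, True, False, True, True, True]
    for game, belief in enumerate(update_on_games(0.3, finals_2016)):
        print(f"after game {game}: P(Cleveland stronger) = {belief:.3f}")
```

Under these assumptions each win multiplies the odds on H by 1.5 and each loss multiplies them by 2/3, so the final belief depends only on the net record, not on the order of the games. That is exactly the property a recency-biased pundit, who overweights the last game, fails to respect.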

When election guru Nate Silver apologized for being wrong about the GOP primaries, he did so for acting like “a pundit” (almost all of whom, by the way, were also wrong) instead of doing the “data journalism” for which he is famous. He wrote (emphasis added):

> The big mistake is a curious one for a website that focuses on statistics. Unlike virtually every other forecast we publish at FiveThirtyEight — including the primary and caucus projections I just mentioned — our early estimates of Trump’s chances weren’t based on a statistical model. Instead, they were what we [called] “subjective odds” — which is to say, educated guesses. In other words, we were basically acting like pundits, but attaching numbers to our estimates. And we succumbed to some of the same biases that pundits often suffer, such as not changing our minds quickly enough in the face of new evidence. Without a model as a fortification, we found ourselves rambling around the countryside like all the other pundit-barbarians, randomly setting fire to things.

So the main “sin” was putting numbers to a guess which, unlike other 538 predictions, was not based on an actual analysis. Some believe that one way to improve the quality of predictions is to have people put money on the line, as in prediction markets. The idea is that the profit motive will help eliminate inefficiencies, but this is not guaranteed. For example, consider the UK referendum (which has earned the charming portmanteau “Brexit”) taking place this week on whether to “Remain” part of the European Union or “Leave.” Prediction markets today have “Remain” as a 3-to-1 favorite, even though poll results have gone back and forth and currently show the electorate almost evenly split. Perhaps some punters believe that voters may flirt with leaving but be held back by their better judgement at the last moment, as happened with the Scottish independence referendum. Or, as King James would put it: